PIMS Industrial Problem Solving Workshop

Topic

Details

The aim of the PIMS Industrial Problem Solving Workshop is to create a mutually beneficial link between researchers in industry and academic mathematicians/statisticians. Research workers with industrial and commercial concerns are invited to present one of their current technical problems. Leading specialists from the academic community study these problems in teams during the week-long workshop, and present the results of their study back to the industrial participants at the end of the week.

[+]Design of a four-qubit quantum gate

Mentors

- Ehsan Zahedinejad

- Barry Sanders

Preparation

- Linear Algebra

- Hilbert Space

- Non-convex optimization

- Numerical programming

Quantum Computing

Quantum computing enables efficient algorithms for problems that are intractable on non-quantum computers. The power of quantum computing arises by representing and processing information in superpositioned states. One of the most important problems amenable to exponential speedup is the hidden Abelian subgroup problem, with factorization as an iconic example.

A quantum computing algorithm can be represented by a unitary matrix U of size 2n with n being the number of qubits (quantum bits). Designing a procedure for calculating the output by applying U on the input state involves a decomposition into a concatenation of gates that act on a small number of qubits, typically using 2x2 and 4x4 unitary matrices tensored with the identity. A major goal in experimental quantum computing is to realize well-functioning gates of these types to serve as building blocks for large-scale quantum computers.

Performance of a quantum computer can be enhanced by realizing quantum gates that act on many qubits at once. One important strategy for realizing a gate acting on a few qubits is to exploit additional energy levels beyond the two levels that serve as the basis states for each qubit. If the physical entity holding the qubit has several levels, quantum control can be employed to steer the evolution through the larger-dimensional Hilbert space spanned by the multiple energy levels of all the objects.

Problem Description

We have successfully designed a procedure for realizing three-qubit quantum gates in four-level systems via quantum control. Such a design appears to be realizable in a superconducting quantum circuit architecture. Our goal is to extend the approach to design a four-qubit gate via quantum control. Such a gate is vital for coding quantum states to realize scalable fault-tolerant quantum computing.

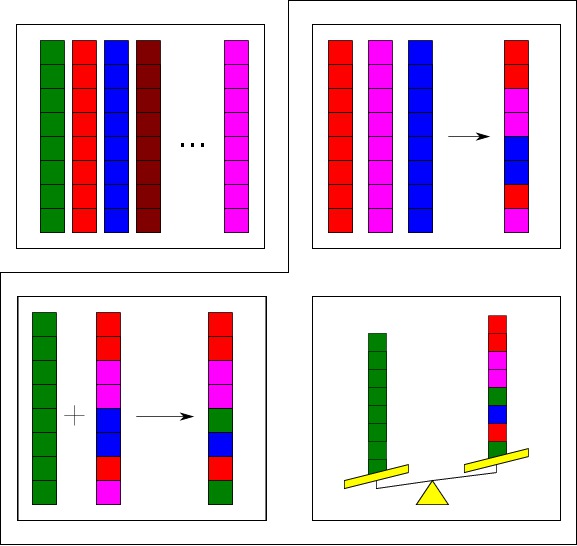

The four-qubit gate design is quite hard to design because these qubits are four-dimensional systems, Optimization is required over a Hilbert space of 44=256 dimensions. In comparison, the three-qubit gate design required optimization over a Hilbert space of only 43=64 dimensions. Even for that a 64-dimensional Hilbert space, we had to construct a modified reinforcement learning algorithm to succeed with optimizing. Our algorithm was based on the differential evolution optimization algorithm (Fig. 1) as the usual convex optimization routines do not succeed for such gates.

Figure 1: Schematic representation of the Differential Evolution algorithm. Each chromosome is a vertical block and initialized to random values in the search space (top left). For each chromosome, three random chromosomes are chosen to be parents of a donor chromosome comprising random data from each parent (top right). This donor chromosome is crossed randomly with the original chromosome to create a trial chromosome (bottom left), which is compared with the original (bottom right), and the fitter chromosome is retained for the next iteration. The dashed line represents a single iteration of the differential algorithm.

The four-qubit gate is much more challenging. We anticipate that superior optimization algorithms will be required in 256 dimensions, and we expect that four-qubit gate design will involve clever optimization strategies.

[+]Microseismic hypocentre location, via distributed acoustic

Mentors

- Matt McDonald

Preparation

- Optimization

- Partial differential equations

- Wave propagation

- Numerical modelling

- Seismology

Distributed acoustic sensing

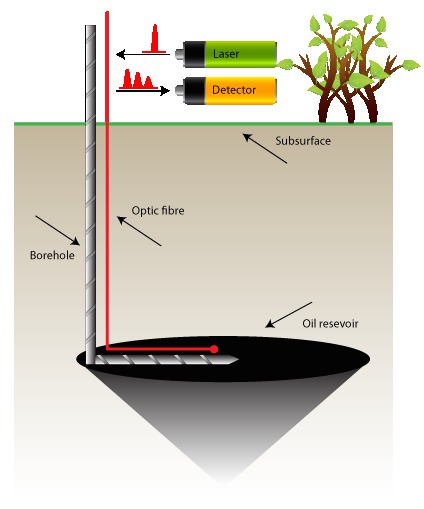

Distributed acoustic sensing (DAS) is a relatively new technique used for measuring strain in down-hole applications in oil and gas. The sensor consists of a single-mode fibre optic cable installed along the length of a well borehole, and connected to a laser and photodetector. A pulse of light is sent down the fibre, and the reflections scattered back to the detector are analyzed to measure strain on the fibre. Depending on the length of the fibre, the laser may be fired many thousands of times per second, and the Rayleigh backscatter sampled to produce a measurement of the strain at numerous points on the fibre. Knowledge of the refractive index of the fibre allows one to use two-way travel-time to treat the fibre as a series of discrete sensors up to 40 km long, where each position acts somewhat like a two-beam interferometer.

Figure 1: DAS with fibre in borehole

In terms of typical data volumes collected, a 5 km fibre with a spatial sampling interval of 0.67 meters would translate to roughly 7462 fibre-positions being sampled 20,000 times per second. The signals can be treated as the output of a long string of geophones distributed along the bore hole, with a high frequency sampling rate.

Problem Description

In current oil recovery technologies, it is important to monitor the production process by tracking events underground, such as fracturing and fluid flow. This monitoring can be done by conventional seismic, but this can be challenging given the noisy acoustic environment during well operation.

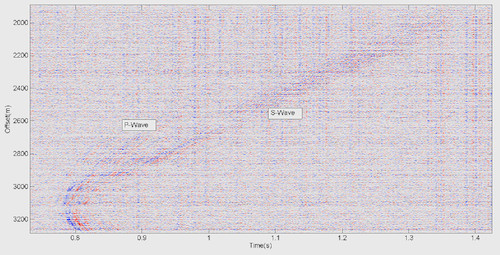

One advantage of distributed acoustic sensing (DAS) over traditional point sensors is that armoured fibre-optic cables may be permanently attached to the outside of a well and used to monitor strain energy during operations and production. Much success has been had in monitoring hydraulic fracturing, using the fibre response as an indication of fracture effectiveness and relative fluid placement. However, due to the inherently single-component nature of DAS, it is inappropriate for use in traditional methods for determining fracture location (hypocentres) within the reservoir, despite the appearance of both P and S waves in the data. Figure 2 shows an example of a fracture event recorded by DAS during fracture operations.

Figure 2: P and S waves in DAS data

The proposed problem is to determine how single-component DAS data could be used to provide information about fracture hypocentres. One possible solution would be, given a velocity model, to minimize the residual between the arrival times of the wave-fronts in the data and those computed from the Eikonal equations for the model.

[+]Service Pricing to Value Received

Mentors

- David Callele (Ag Exchange Group, Inc.)

Preparation

- Linear Methods

- Optimization

- Game Theory

- Robust Estimation in Uncertainty

Buying and selling on the grain markets

Farmers (Growers) grow grain and sell their inventory for delivery to local terminals. From there, the grain is shipped to destination markets. Grain Buyers typically purchase grain from a given region for delivery to a given terminal, and the price offered to a Grower is a function of many factors. Furthermore, the transaction is usually mediated by a Broker. Both Grower and Buyer typically pay commissions of up to 2% to the Broker. The Grower pays to get their inventory sold on their behalf; the Buyer pays to get access to the inventory.

Figure 1: Grain is delivered to local terminals, a factor in the price.

Ag Exchange Group, Inc. provides a data service that helps Buyers to directly find grain inventory held by Growers and to make Bids upon that inventory. This data service has value, and our challenge is to identify a simple and transparent service pricing algorithm that reflects the value of the data to the Buyers. Growers are assumed to sign up and pay for the service under a previously defined flat-fee schedule; so we do not need to consider a service pricing algorithm for them.

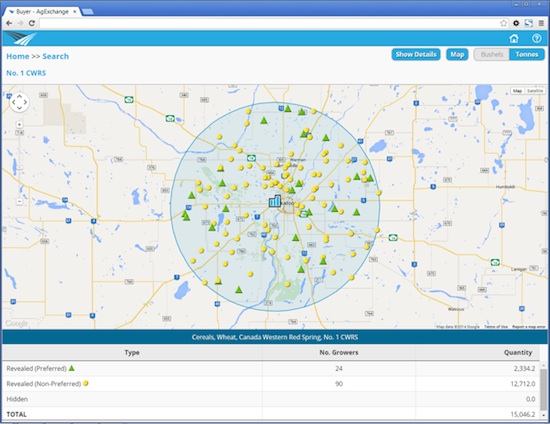

Figure 2: Purchasing is typically (but not always) performed using a radius-based serving area.

As noted above, a given Buyer purchases grain for delivery to a terminal and each terminal typically operates using a radius-based serving area (Figure 2).

There are many factors that influence the value of the bid, including the number of growers on the data service, historical and projected inventory trends, quality and quantity of the commodity, transportation costs, etc.

Problem Description

The Buyer's perception of the value of the data service has many factors such as (not comprehensive):

- Cost comparison to alternatives such as paying commissions to Brokers. This represents a hard ceiling on the value of the service that is equivalent to the Broker's commissions.

- Reduction in workload, improved efficiencies when sourcing commodities.

- Improved market intelligence such that new opportunities can be pursued.

Our problem is to maximize the amount that we can charge for access to the data service while presenting a supportable argument as to why we are a more cost-effective solution to meeting the Buyer's needs. We must also be able to justify variable pricing for different Buyers -- why are the fees different for each Buyer?

As shown above, the number of dimensions that can affect the value is significant.

Our questions include

- What are reasonable assumptions for each dimension that can be used to constrain the search space? For example, Growers typically want to deliver to a terminal within an hour (note time and not absolute distance) of their storage units.

- What techniques(s) can be used to find a solution to the problem?

- For a given technique, how do we determine which dimensions are the most important?

- If we had an accurate representation for each dimension, does optimization yield a global solution to the pricing question or are there many localized solutions? And, for localized solutions, can we quantify how many local solutions exist?

- Given the answer to the question about the number of solutions, is there a (greatly) simplified representation of the "pricing equation" that is easier for the Buyer (customer) to understand? A representation that the Buyer can explicitly or intuitively understand that approximates the optimal answer?

[+]What is a successful advertising campaign?

Mentors

- Briana Brownell (Insightrix)

Preparation

- Statistics

- Numerical data collection and analysis

- Survey methods

- Statistical Software

Measuring advertisement effectiveness

Advertising and marketing are a considerable expense for most companies. To show the return on investment (ROI), companies often seek some kind of measurement to assess their performance. Such measurements are notoriously difficult and present a complex optimization problem that has not yet been solved. With an increasingly fractured market, it is more and more difficult to provide a valid and reliable benchmark for advertising performance. Moreover, with changes in the way people see (or don't see) advertisements on new media such as pay-per-view, internet, and mobile apps, there are additional complications because of the overlap of the various media and difficulty gathering statistics for some media.

Figure 1: Are ads effective or not? and how is this measured?

Traditionally, the following statistics are commonly used to measure aspects of the "success" of an advertisement:

- Prompted recall: percentage of consumers who claim to have seen the ad.

- Brand link: percentage of those who can link the ad to a brand.

- Message comprehension: how well the ad viewers understood what the ad was for.

- Wear-out: how many viewers are getting tired of the ad.

- Relevance: does the ad have meaning personally to the viewer.

- Interest: does the ad increase the viewer's interest in the brand.

- Enjoyability: does the viewer enjoy watching the ad.

Notice that many of these statistics build through time over the course of an advertisement campaign, adding to the complexity of measurement.

Problem Description

Can an alternative, accurate, and statistically valid measurement for data gathered from several tracking surveys be created, for the following purposes:

- Benchmarking: how successful was the advertisement?

- The methodology must be comparable across time periods

- Companies must be able to compare the success of several executions (ads)

- Companies must be able to compare their performance against competitors as well as in-market companies in different industries

- Return on investment: was the success cost-effective?

- What is the optimal Gross Rating Point (GRP) spend relative to the cost of advertising? (GRP: Number of individuals exposed $\times$ number of times exposed)

- How do we measure GRP in relation to negative responses like wear-out?

- Do these factors differ by advertising medium?

The project sponsor, Insightrix, will provide a range of survey data for analysis, including weekly data in a cross-sectional survey design (one brand over five years, on TV, print, billboard, online), and monthly data (current in-market advertisements over two years).

[+]Optimal scheduling for mining production

Preparation

- Numerical optimization

- Linear programming

- Scheduling

- Discrete Mathematics

- Software

Overview

Managing a network of mines is a complex operation. Each mine has its own unique character, able to produce a range of products in a variety of capacities, some mines with space for on-site inventory storage, other mines with processing facilities to refine product into other marketable items, each mine with its unique transportation access and costs, and a variety of constraints on plant operations. Scheduling of shut-downs and starts-up of each mine can be a key step in determining the network operations. Ultimately the goal of a commercial mining operation is to maximize profits over a sustained period of time, taking into considerations the constraints of operation while responding to opportunities in the marketplace. A successful company relies on the experience and expertise of its managers to find reliable methods to optimize these operations.

Figure 1: A commercial mine is a complex operation.

Problem Description

Is there a general methodology or algorithm to reliably optimize operations of a network of mines, in order to maximize profit over a sustained period? It would need to account for specific characteristics of each mine (e.g. products produced by that mine, in what capacity, at what cost, with what inventory available), constraints such as restrictions on length of shutdown for a particular mines, required down-time for maintenance, addition of new mines to the network, unexpected events such as accidents leading to mine closure, and random market fluctuations. The successful methodology will have to function over an operational period of many years, with the possibility of adaptation over the operational period.

Data

The sponsoring company will provide extensive data on the details of the mining operations: products produced at each mine, production capacity and costs, shutdown restrictions, transportation capacity and costs, estimates of market demands and variability, etc. The goal is to create a solution for an actual mining operation managed by this Canadian company.

Additional Information

Registration:

To register for this event, please complete the REGISTRATION FORM. Your registration will be assessed and you will be contacted once registration closes.

Survey:

Please help PIMS to improve the quality of its events and plane for the future by filling out this quick and painless survey.

Final scientific report available here.